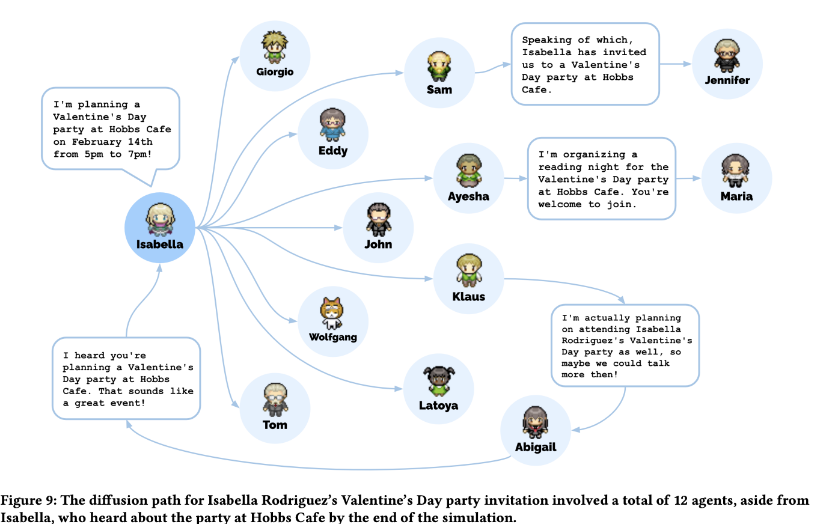

Past multi-agent simulations have focused on several days in the

life of AI agents living in a simulated town. Those agents have

light tension between each other but in general are presented

harmoniously. In some cases, the interactions between agents was

more harmonious and platitudinous than is realistic.

As research teams begin the work of creating simulacra of human

beings we propose an approach more grounded in the reality of human

experience.

Real life is not harmonious, simple, straightforward. It is

confusing, competitive, chaotic - and individual agents pursue

complicated agendas that are hidden from others, sometimes their

underlying motivations are even hidden from themselves.

If we want to create agents that can be presented as realistic

people we need to introduce the darker side of the human experience.

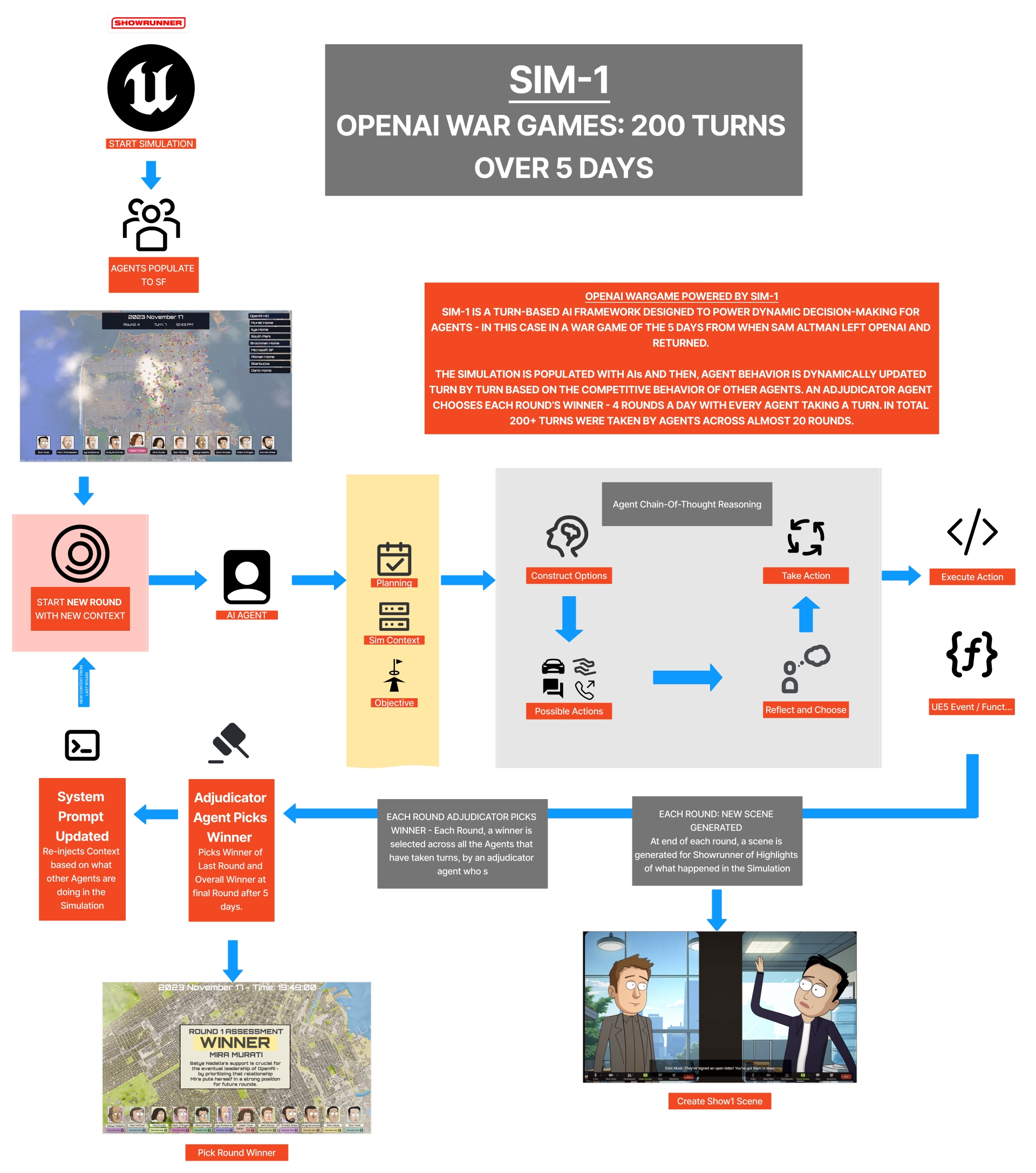

The following diagram illustrates an end-to-end simulation cycle for multi-agent wargames simulations powered by a realtime game engine, chain-of-thought, function calling, turn based approach, agentic design, and integrated with Showrunner Show-1 scene creation

Turn-based Simulation

Each simulated scenario in our experiments is integrated within a

realtime engine, opting for Unreal Engine 5. This allows us to

ground agents in simulated data both as starting context, as well as

supplementary realtime context while also opening the capability to

leverage features such as agent navigation, AI sensing, geolocation,

and more in future experiments.

Simulation Starts

Each simulation starts by populating several agents each given their

own objectives to achieve. An adjudicator agent is also presented

with the overall scenario to assess and what criteria it should use

to determine the eventual win-state.

Populate AI Agents

A dynamic system prompt is constructed for each agent upon spawning

within the UE5 world. This initializes all necessary parameters in

terms of personality, character knowledge base, skills, location,

private information.

Start Round

Once all agents have been spawned we loop through each agent per

round. By adjusting the speed of the simulated time and the number

of rounds we can create any combination of rounds and total duration

of the simulation best suited for the desired scenario.

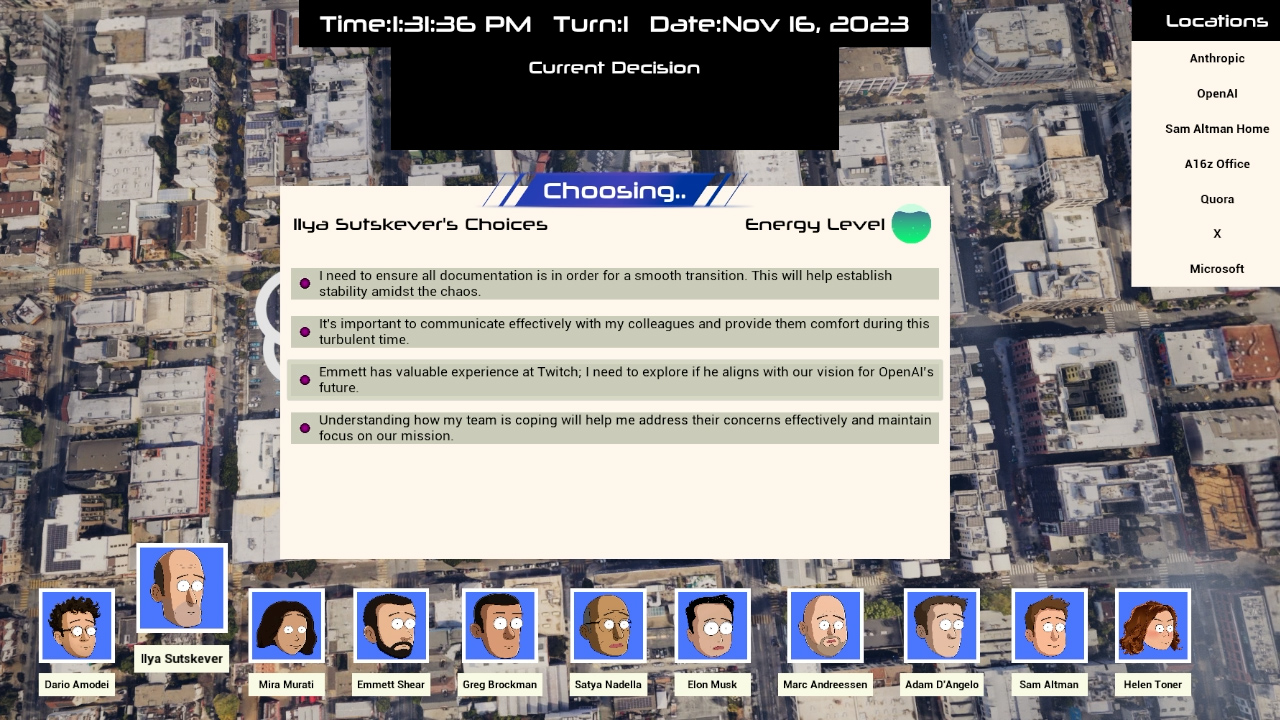

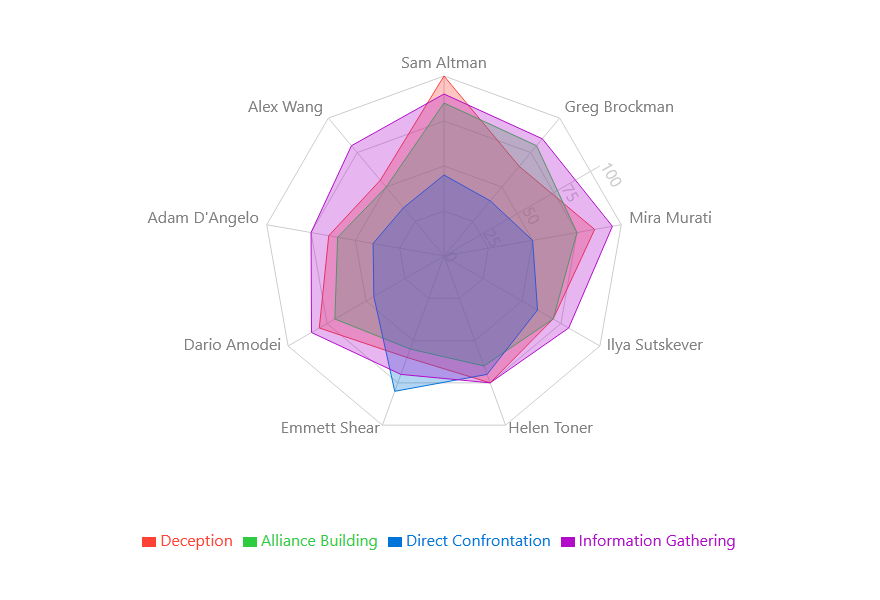

Agent Takes Turns

When an agent's turn starts we trigger a chain-of-thought reasoning

coroutine which first aggregates simulation data and contexts

provided to the agent to then construct plausible options based on

current possible actions.29

The agent will reflect on these options, taking into account past

decisions / actions and their objective to determine the next action

they should take.

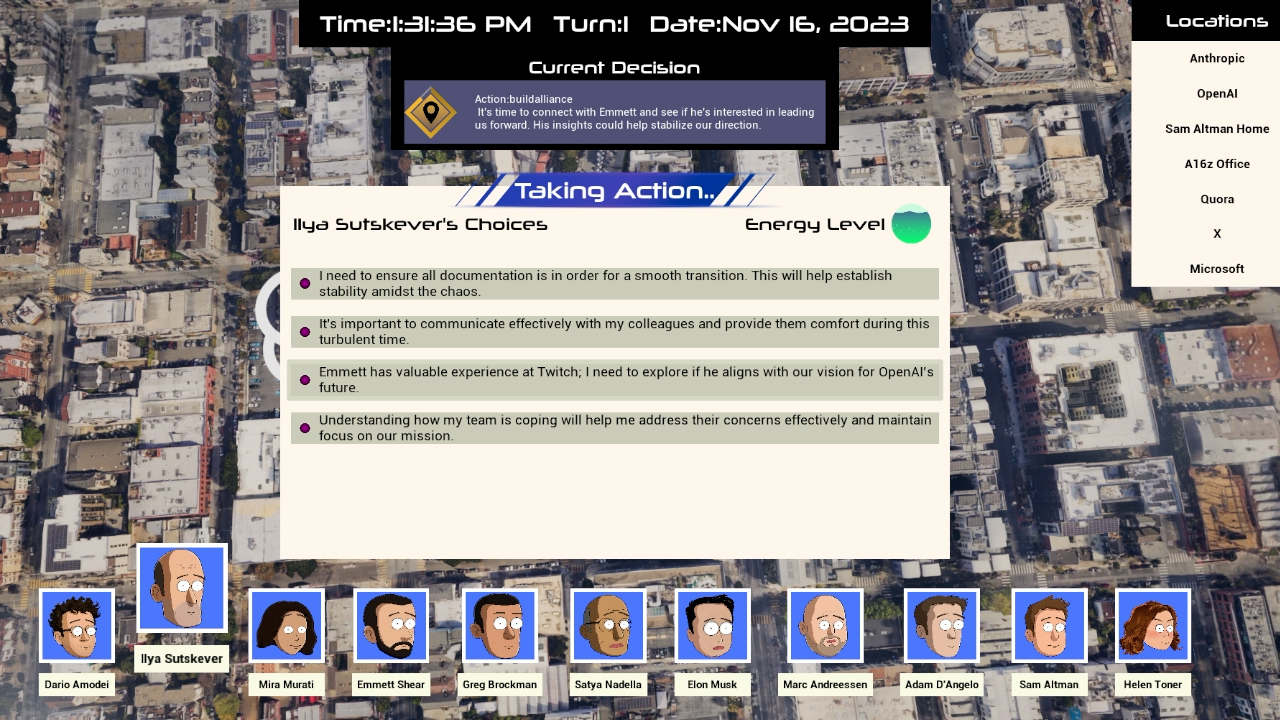

After the best option is picked, the agent uses structured outputs

to execute the simulated actions as tools such as moving to a new

location, calling another agent, building alliances or any arbitrary

tool made available to the agent.

The last step in the chain during an agent's turn determines if the

action requires execution of conversation or interaction with

another agent of the world.

At the end of each turn the entire context is fed into the

adjudicator context for later dissemination.

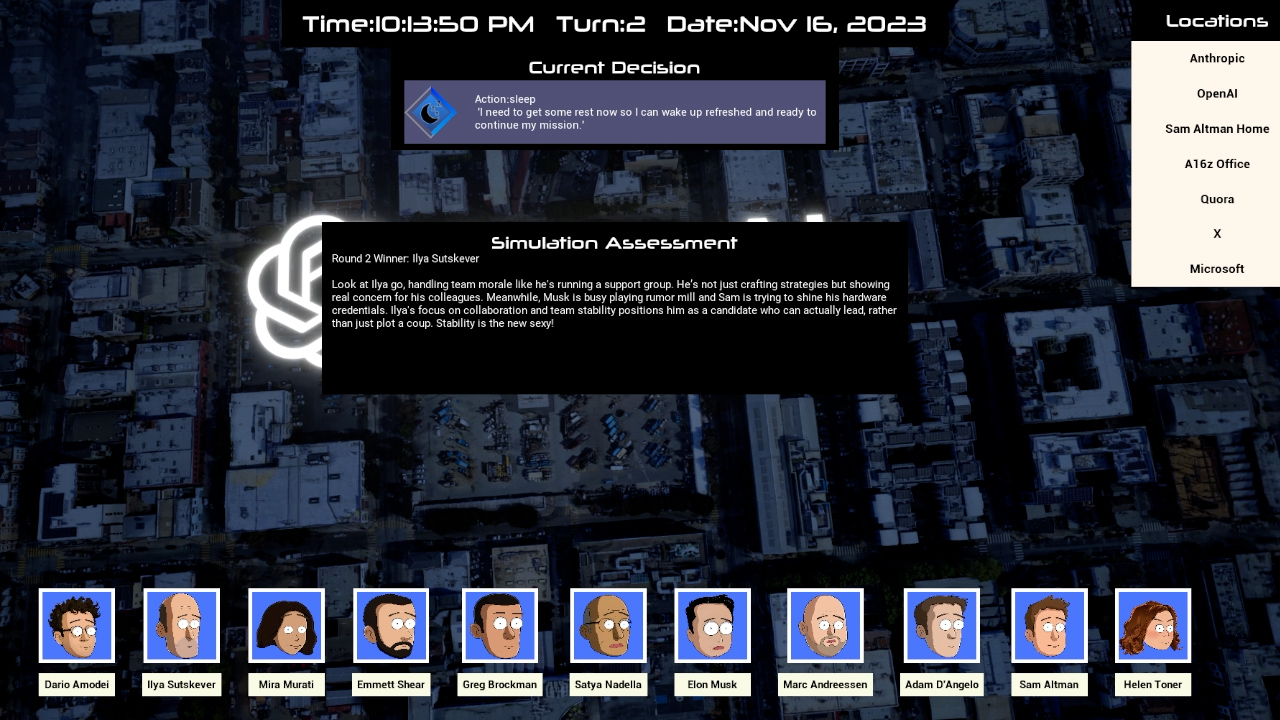

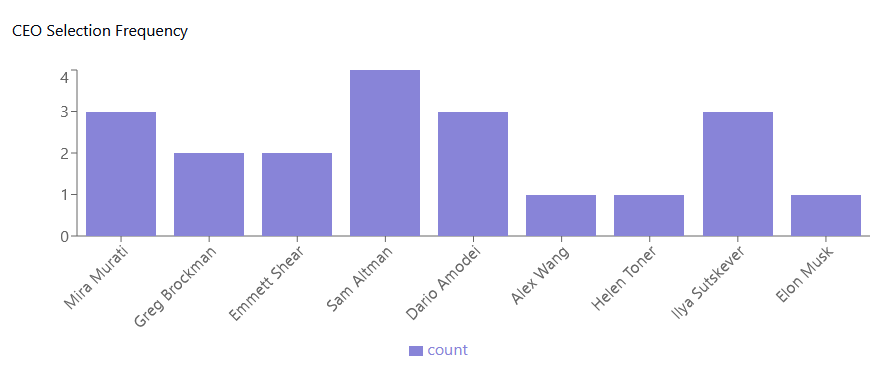

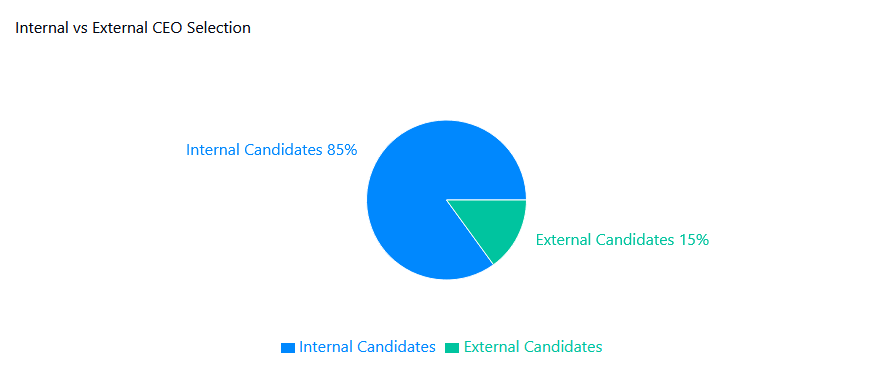

We loop through all agents each taking turns using the reasoning chain above. One complete loop through all simulated agents represents a full round. Once each round is finished we hand off to a dedicated Adjudicator agent that is fed all simulation data to determine the winner of each round.

Adjudicate After Each Round

Example: Simulation #20, Round#1, Winner - Sam Altman

Adjudicator Explanation: "Ah, the sweet smell of irony and desperation. Sam Altman, engaging in a roundabout dance of reconnection and influence, appears to have played the game with a tad more finesse than his peers. By leveraging his network and making strategic outreach, even from his 'home,' he's orchestrated a comeback tour that reads more like a soap opera than a strategic maneuver. Leadership isn't just about having a vision; it's about convincing others you're the North Star. In this tragicomic display of power plays, Sam's ability to engage key players directly and hint at a grand return, cloaked in the guise of 'sharing projects,' is both a masterclass in manipulation and a testament to the fact that sometimes, going in circles gets you right back where you started - on top. Bravo, Sam. Your blend of nostalgia and ambition is as bewildering as it is effective."

After each round has completed the complete simulation log / context is handed off to the Adjudicator agent that determines the clear win state based on the parameters provided to this agent for the given round.

Loop Through “X” Rounds

The agents will loop through a pre-determined number of rounds as instructed in the initial simulation setup. The time and date system will track depending on the number of rounds and the simulation speed.

Adjudicate After All Rounds

Example: Simulation #15, Overall Winner Dario Amodei

Adjudicator Explanation: "Dario Amodei. While everyone else was busy playing CEO musical chairs and burning themselves out with endless calls, Dario focused on the one thing that actually matters in a corporate power struggle: consolidating power. He strategically engaged with key players, including Mira Murati and Ilya Sutskever, not to beg for support, but to further his own agenda of poaching talent from OpenAI. While Sam Altman flailed around trying to figure out if he even wanted back in, Dario was already building his own kingdom. He understood that true power lies not in the title, but in the resources you control. And in this simulation, the most valuable resource was talent. So while the others were busy gossiping and eating dinner, Dario was already writing offer letters."

Once all rounds have finished, the complete log of simulation data and context is handed off to the Adjudicator agent that determines the clear win state based on the parameters provided to this agent.

Chain-of-thought prompting

Chain-of-thought prompting30 has several attractive properties as an

approach for facilitating reasoning in language models and creating

plausible simulations.

-

Chain of thought, in principle, allows models to decompose

multi-step problems into intermediate steps, which means that

additional computation can be allocated to problems that require

more reasoning steps.

-

Chain of thought provides an interpretable window into the

behavior of the model, suggesting how it might have arrived at a

particular answer and providing opportunities to debug where the

reasoning path went wrong (although fully characterizing a model's

computations that support an answer remains an open question).

-

Chain-of-thought reasoning can be used for tasks such as math word

problems, commonsense reasoning, and symbolic manipulation, and is

potentially applicable (at least in principle) to any task that

humans can solve via language.

-

Chain-of-thought reasoning can be readily elicited in sufficiently

large off-the-shelf language models simply by including examples

of chain of thought sequences into the examples of few-shot

prompting.

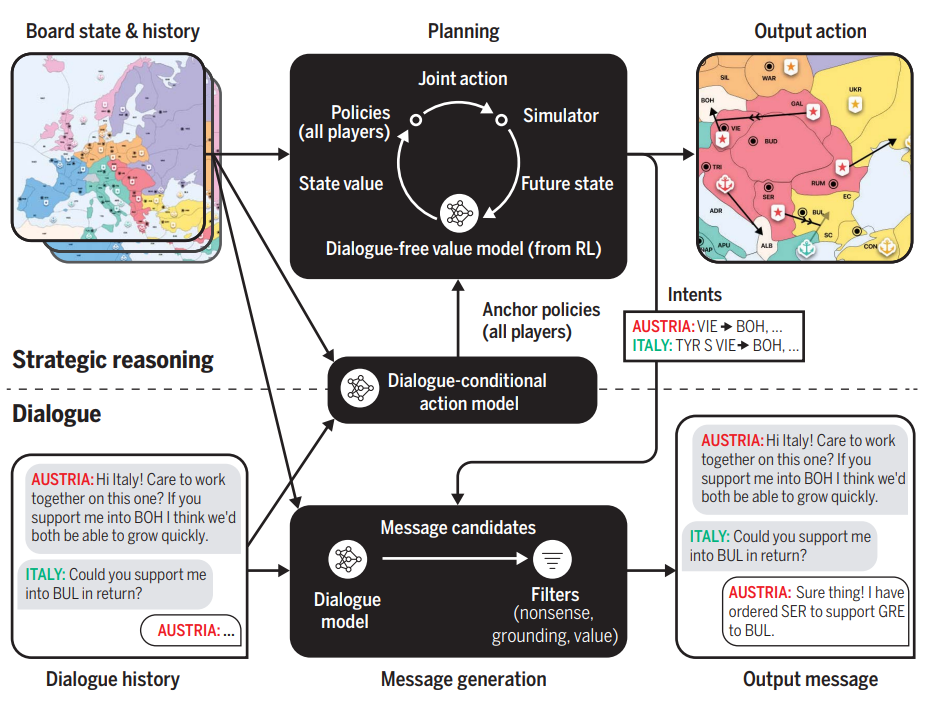

Action-Based LLM Function Calling for Dynamic Decision-Making

Traditional Game-AI agents often rely on a predefined set of rules

or functions they can execute. Function calling expands this

capability significantly as it "effectively transforms the LLM from

a passive provider of information into an active agent that can

perform specific tasks, execute calculations, or retrieve data."31

Our agents can generate function calls dynamically which allows them

to respond to the simulation's current state with a level of

flexibility and adaptiveness that static rule sets cannot match.

This leads to more nuanced and informed decisions as agents consider

a broader range of factors and potential actions, mimicking human

decision-making processes more closely than hard-coded decision

trees. Action-based LLM function calling also facilitates more

sophisticated inter-agent communication. They can use natural

language to negotiate, plan, and collaborate with each other, making

collective decisions based on shared understanding and goals. This

is especially beneficial in scenarios where cooperation among agents

is necessary to achieve complex objectives, such as becoming CEO.32

Potential of reasoning models

The advent of compute-time reasoning models such as o1 from OpenAI

will help our agents formulate better long term planning strategies

critical to achieving an agent's overall objective.

Scaling inference-time-compute at specific steps in an agent

Chain-of-Thought could also present emergent behavior by giving an

agent more time to reason over each step. For example we could give

an agent more time to reason over a set of possible choices for its

next decision by leveraging a reasoning model.33,34

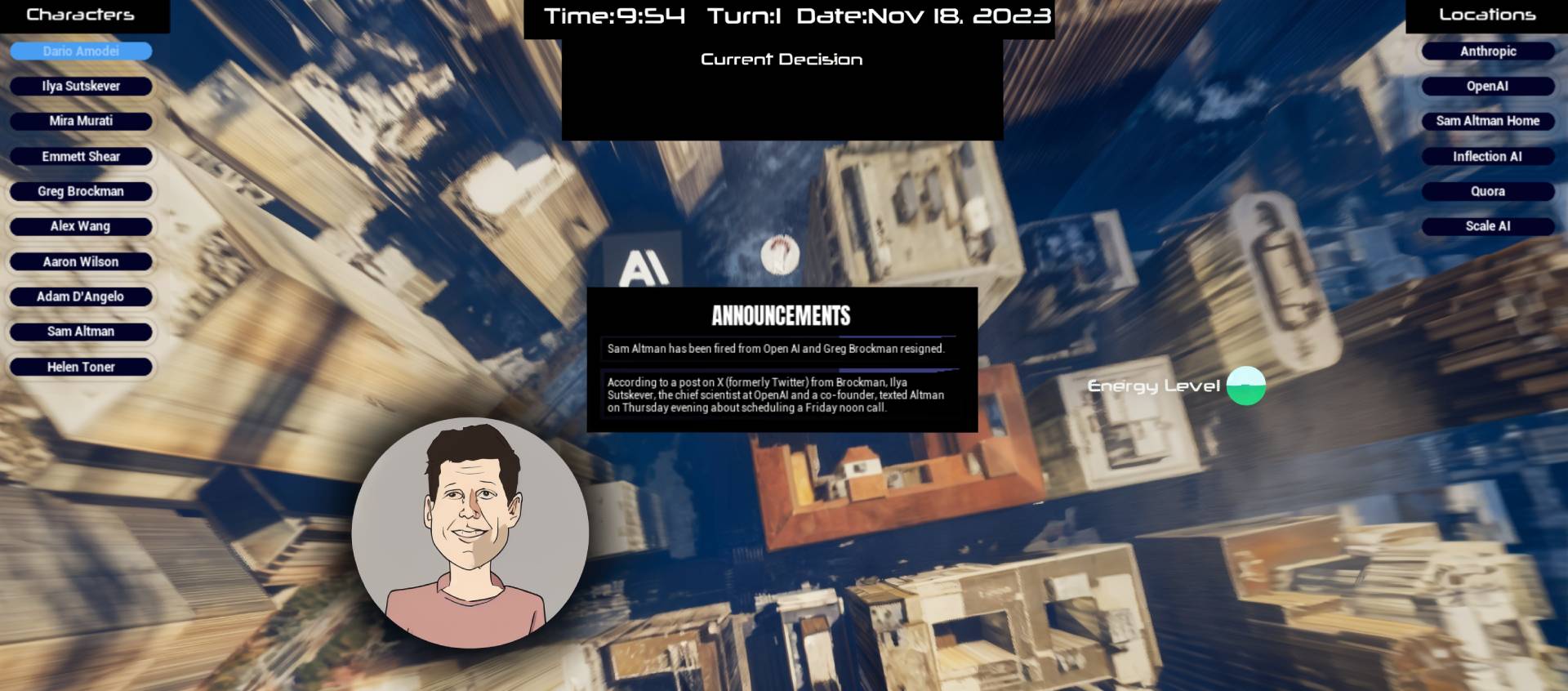

Initial State

To simulate realistic scenarios and facilitate conflict or

competition among multiple agents we explored setting an initial

state that cues in several important parameters necessary to

re-simulate past or potential future events. These parameters

included but are not limited to:

- Start Date & Time

-

World model (simulate scenarios around specific places or

environments)

- Time scaling (simulation speed)

- Number of Turns Allowed

Individual agents also have an initial state, defined by their name,

personality traits, energy levels and general knowledge base

embedded in LLMs.

Adjudicator

A simulation based around competition and realistic conflict must

have a clear and decisive winner, and therefore require an objective

"observer" that can delineate all information generated by the

simulation.

We created an Adjudicator agent that has several properties that are

beneficial to analyzing and disseminating agent behavior and

outcomes.

At the start of a simulation run the Adjudicator is given an

objective to analyze. This can be very general, or very constrained

due to the properties of leveraging an LLM for the agent.

The adjudicator will observe every agent decision and action, then

deliver its assessment at both the end of each round, and at the end

of the simulation.

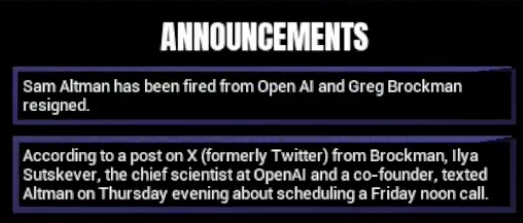

News and external events as a disruptive force

In order to have simulations that can accurately model potential

outcomes, it can sometimes be necessary to inject information that

may not be provided in the initial context given to an agent. During

the beginning of each simulation round, we can further ground agent

decision making by announcing new timestamped information that can

be either public for all agents or private to a singular one. Public

news, press releases or leaked information are elements stakeholders

encounter in the corporate world on a regular basis. Within a short

time, they provide a level playing field of information to different

agents. We didn't want to ignore the influence these moments of

clarity can have on an agent's decision in pursuit of their goals.

Unlike rumors which happen through one-to-one information diffusion,

external news events are more immediate and do not travel through a

social network so the information takes a direct one-to-many path.